Hi all, this time we will discuss installing Hadoop on Windows using Cygwin. The first step is to install Cygwin on the Windows system. You can download Cygwin from here: http://www.cygwin.com/

Download and install it. When the installer asks which packages to include, make sure you select the “OpenSSH” package (listed as “openssh” in the Net category).

Now, if the installer did not do it for you, set the PATH environment variable so that it includes Cygwin's bin folder, for example C:\cygwin\bin.

Next, configure the ssh daemon by executing this command in Cygwin:

ssh-host-config

When asked whether privilege separation should be used, answer no.

When asked whether sshd should be installed as a service, answer yes.

When asked for the value of the CYGWIN environment variable, enter ntsec.

Now, if the sshd service has not started, open “services.msc” from the Run dialog and start the “CYGWIN sshd” service there.

Next, you need to set up key-based authorization, so that ssh does not ask for a password every time you connect. Follow these steps:

Execute this command in Cygwin:

ssh-keygen

When it asks for the file name and the passphrase, just press ENTER without typing anything, to accept the default values.

Once the command has finished, type the following:

cd ~/.ssh

To check the keys, run “ls”. You will see the id_rsa and id_rsa.pub files.

Now add your public key to the authorized_keys file, which lists the keys sshd accepts for your account. If you have used this Cygwin installation before, the file may already exist; the >> operator appends to it instead of overwriting it. Execute:

cat id_rsa.pub >> authorized_keys
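The key steps above can also be scripted, which is handy if you set up more than one machine. This is a minimal sketch, not the tutorial's own method: setup_passwordless_ssh and the SSH_DIR override are hypothetical names, and it assumes OpenSSH's ssh-keygen with an RSA key and an empty passphrase, exactly as in the interactive steps. Unlike a plain cat >>, it only appends the public key if that line is not already in authorized_keys, so running it twice does no harm.

```shell
# Sketch: passwordless ssh to localhost, mirroring the manual steps.
# setup_passwordless_ssh is a hypothetical helper; SSH_DIR defaults to ~/.ssh.
setup_passwordless_ssh() {
  local ssh_dir="${SSH_DIR:-$HOME/.ssh}"
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"

  # Same as running ssh-keygen and pressing ENTER at every prompt:
  # default RSA key file, empty passphrase (-N ""), quiet output (-q).
  if [ ! -f "$ssh_dir/id_rsa" ]; then
    ssh-keygen -t rsa -N "" -q -f "$ssh_dir/id_rsa" || return 1
  fi

  # Append the public key only if that exact line is not already present.
  touch "$ssh_dir/authorized_keys"
  if ! grep -qxF -f "$ssh_dir/id_rsa.pub" "$ssh_dir/authorized_keys"; then
    cat "$ssh_dir/id_rsa.pub" >> "$ssh_dir/authorized_keys"
  fi

  # sshd may ignore authorized_keys if other users can write to it.
  chmod 600 "$ssh_dir/authorized_keys"
}
```

Define the function in your Cygwin shell and call setup_passwordless_ssh; after that, ssh localhost should log in without asking for a password.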

Now try logging in to your own localhost by executing:

ssh localhost

The first time, it will ask you to confirm the host key; answer yes. From the next time on, you will not have to do it again.
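To confirm the key setup worked without typing anything, you could use a check like the following minimal sketch (check_passwordless_ssh is a hypothetical name). It relies on ssh's BatchMode option, which makes ssh fail instead of prompting for a password, so run it only after you have answered yes to the first-time host key question.

```shell
# Sketch: verify that "ssh localhost" works without a password prompt.
# check_passwordless_ssh is a hypothetical helper name.
check_passwordless_ssh() {
  # BatchMode=yes makes ssh fail rather than ask for a password,
  # so a zero exit status means key-based login really works.
  if ssh -o BatchMode=yes -o ConnectTimeout=5 localhost true; then
    echo "passwordless ssh to localhost: OK"
  else
    echo "passwordless ssh to localhost: FAILED (is sshd running?)" >&2
    return 1
  fi
}
```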

You will also need Java installed on your machine, because Hadoop runs on Java, and you will have to set the JAVA_HOME environment variable to point to it. I have used Java 1.6, and this tutorial is based on that version.

After you have downloaded Hadoop, you need to extract it, since it comes as a compressed .tar.gz archive. To extract it, type the following command at the Cygwin command prompt:

tar -xzf hadoop-0.20.203.0.tar.gz

(The file name may be different, depending on the version you have downloaded.) Also, I have extracted Hadoop into the Cygwin folder on the C drive, so adjust the commands and paths below to match your own setup.

Once that is done, you can see the contents of the extracted Hadoop folder with the command “ls”.

To work with the Hadoop file system, go into the folder where the extracted Hadoop is and type:

$ cd ../../hadoop-0.20.203.0

$ bin/hadoop fs -ls

What does it say? It complains that the environment variable JAVA_HOME is not set. So, let's set it, and fill in the other configuration files too.

Go to the extracted Hadoop folder -> conf -> hadoop-env.sh and open it for editing.

This is the file where the environment variables are set. In it, you will find a commented-out line (a line beginning with #) like:

#export JAVA_HOME=

Uncomment it and then give the path where Java is installed on your system.

The path must be given in Cygwin form, with /cygdrive in front of it. For example, if your JDK is installed at C:\Java\jdk1.6.0_27, give the path as:

export JAVA_HOME=/cygdrive/c/Java/jdk1.6.0_27

If the path contains a space, escape the space with a backslash. For example:

export JAVA_HOME=/cygdrive/c/Program\ Files/Java/jdk1.6.0_27

All the other environment variables can be left at their defaults.

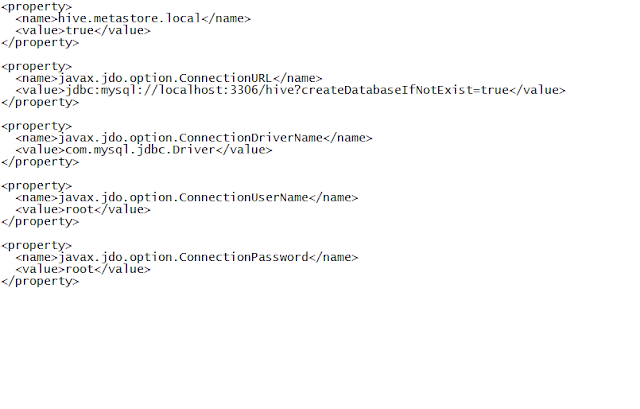
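Editing hadoop-env.sh by hand works fine. If you prefer to do it from the Cygwin prompt, here is a minimal sketch using GNU sed, which is the sed Cygwin ships. The function name set_java_home is hypothetical. It rewrites the commented #export JAVA_HOME= line (or an already uncommented one) so that it points at the path you pass in.

```shell
# Sketch: set JAVA_HOME in hadoop-env.sh from the command line.
# set_java_home is a hypothetical helper; assumes GNU sed (sed -i, sed -E).
set_java_home() {
  local env_file="$1" java_path="$2"
  local escaped
  # Escape the characters that are special in a sed replacement: \ & and |.
  escaped=$(printf '%s' "$java_path" | sed 's/[\\&|]/\\&/g')
  # Matches "#export JAVA_HOME=...", "# export JAVA_HOME=..." or an active line.
  sed -i -E "s|^#?[[:space:]]*export JAVA_HOME=.*|export JAVA_HOME=${escaped}|" "$env_file"
}
```

For example, from the Hadoop folder: set_java_home conf/hadoop-env.sh /cygdrive/c/Java/jdk1.6.0_27. For a path with a space, quote the argument and keep the backslash, e.g. '/cygdrive/c/Program\ Files/Java/jdk1.6.0_27'.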

Now we go to the second configuration file, hdfs-site.xml, in the same location. In it, add a property between the configuration tags that sets dfs.replication, the number of copies HDFS keeps of every file. You can set the value as you want; here we have set it to 1, since this single machine is the only datanode.
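For reference, a minimal hdfs-site.xml with the replication factor set to 1 might look like the one written below. This is a sketch, assuming Hadoop 0.20's property name dfs.replication and that you run it from the extracted Hadoop folder; note that it overwrites any existing conf/hdfs-site.xml.

```shell
# Sketch: write a single-node hdfs-site.xml (overwrites the existing file).
# HADOOP_CONF_DIR defaults to ./conf, i.e. run this from the Hadoop folder.
conf_dir="${HADOOP_CONF_DIR:-conf}"
mkdir -p "$conf_dir"
cat > "$conf_dir/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <!-- One copy of each block: this machine is the only datanode. -->
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF
```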

Now open the file core-site.xml and set the fs.default.name property, the address of the namenode, to hdfs://localhost:4440. You can use any port number instead of 4440, but it should be free.
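A minimal core-site.xml along these lines could be written as follows. This is a sketch, assuming Hadoop 0.20's property name fs.default.name and the port 4440 used above; it overwrites any existing conf/core-site.xml.

```shell
# Sketch: point Hadoop's default file system at the local namenode.
# HADOOP_CONF_DIR defaults to ./conf, i.e. run this from the Hadoop folder.
conf_dir="${HADOOP_CONF_DIR:-conf}"
mkdir -p "$conf_dir"
cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <!-- Namenode address; 4440 is the port used in this tutorial. -->
    <name>fs.default.name</name>
    <value>hdfs://localhost:4440</value>
  </property>
</configuration>
EOF
```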

Now we have to make changes to the mapred-site.xml file, located in the same folder. In it, set the mapred.job.tracker property to localhost followed by the port number on which we want to run the job tracker. You can use any free port.
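A minimal mapred-site.xml could look like the one written below. This is a sketch, assuming Hadoop 0.20's property name mapred.job.tracker; the port 4441 is a hypothetical choice, not one given in this tutorial, so substitute any free port. It overwrites any existing conf/mapred-site.xml.

```shell
# Sketch: tell Hadoop where the JobTracker listens.
# HADOOP_CONF_DIR defaults to ./conf, i.e. run this from the Hadoop folder.
conf_dir="${HADOOP_CONF_DIR:-conf}"
mkdir -p "$conf_dir"
cat > "$conf_dir/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <!-- JobTracker host:port; 4441 is a hypothetical free port. -->
    <name>mapred.job.tracker</name>
    <value>localhost:4441</value>
  </property>
</configuration>
EOF
```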

All the configuration is done. You can look at the remaining files too. Note that both the “masters” and “slaves” files contain localhost, because the local machine acts as both master and slave.

The last thing you need to do is to make the namenode available and ready. Since it is being used for the first time, you first need to format it. I am assuming that you are presently in the Hadoop folder. If not, cd into it first, e.g.:

$ cd ../../hadoop-0.20.203.0

Then type the following command:

$ bin/hadoop namenode -format

It will print some information and then format the namenode. Now you need to start it and watch it run. So, type:

$ bin/hadoop namenode

It will start running. Don't stop it; instead, open a new Cygwin command prompt, go to the Hadoop folder and type:

$ bin/hadoop fs

It will show the options you can use with the fs command. Try some of them, such as -ls.

Now, in another command prompt, run the secondary namenode:

$ bin/hadoop secondarynamenode

In the next command prompt, run the job tracker:

$ bin/hadoop jobtracker

In another one, run the datanode:

$ bin/hadoop datanode

And finally, in one more command prompt, run the task tracker:

$ bin/hadoop tasktracker

While starting these, you will have noticed new messages appearing in the namenode's window. Try reading through those messages to see what each daemon reports.
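Running each daemon in its own window, as above, lets you watch its output live. If you would rather start them all from one window, here is a minimal sketch under stated assumptions: it runs from the Hadoop folder, starts the daemons in the same order as above in the background with nohup, and writes each one's output to a log file. The function name start_hadoop_daemons, the daemon-logs folder and the HADOOP_BIN and NAMENODE_WAIT overrides are hypothetical names, not Hadoop settings. (Hadoop 0.20 also ships bin/start-all.sh, which starts the daemons over ssh using the masters and slaves files.)

```shell
# Sketch: start the five daemons from one Cygwin window instead of five.
# start_hadoop_daemons, daemon-logs, HADOOP_BIN, NAMENODE_WAIT are hypothetical.
start_hadoop_daemons() {
  local hadoop="${HADOOP_BIN:-bin/hadoop}"
  local log_dir="daemon-logs"
  local daemon
  mkdir -p "$log_dir"
  for daemon in namenode secondarynamenode jobtracker datanode tasktracker; do
    # nohup plus & keeps each daemon running in the background.
    nohup "$hadoop" "$daemon" > "$log_dir/$daemon.log" 2>&1 &
    echo "started $daemon (pid $!), log: $log_dir/$daemon.log"
    # Give the namenode a moment to come up before the others connect to it.
    if [ "$daemon" = namenode ]; then
      sleep "${NAMENODE_WAIT:-5}"
    fi
  done
}
```

After calling start_hadoop_daemons, you can follow the namenode's messages with tail -f daemon-logs/namenode.log.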